Ivo Pilving

Chairman of the Administrative Law Chamber of the Supreme Court, University of Tartu Associate Professor in Administrative Law

Monika Mikiver

Adviser in the Public Law Division of the Ministry of Justice, Doctoral student at the University of Tartu Faculty of Law

Artificial intelligence applications of the “fourth industrial revolution” already used in the private sector are now increasingly finding their way into public sector offices. Estonia also has ambitions to make wider use of robots, or kratts (named after a magical servant creature from Estonian folklore), in public administration to support or replace officials.[1] Public sector kratts have received only cursory academic attention,[2] even though in 2019 the legislator already authorized automated administrative decisions in some fields (tax administration, environmental fees, unemployment insurance).[3] There are plans to present the Riigikogu (the Estonian parliament) with a bill by June 2020 that, if adopted, would amend existing legislation, including the Administrative Procedure Act, to allow for wider use of artificial intelligence.[4]

There has been talk in the Estonian media about automating pension payments and unemployment registration.[5] It is worth noting that not all e-government solutions are based on artificial intelligence; many are simply automated forms of data processing. True, under the principles of administrative law, such human-guided solutions may also violate the law if they malfunction. The real challenge, however, is regulating artificial intelligence, especially self-learning algorithms. If implemented carelessly or maliciously, kratts may easily defy the rules of fair procedure, break the law or treat people and businesses arbitrarily. And robots cannot yet explain their decisions.

For our kratt project to succeed without exposing society or businesses to grave risks, those developing e-government must understand the nature of machine learning as well as its impact on administrative and judicial procedures.[6] Acting rashly in this field is tantamount to exercising governmental authority according to a horoscope. It would be naïve and dangerous to succumb to the illusion of a kratt that can, now or soon, engage in reasoned debate or comprehend the content of human language, including legal texts. Proper implementation of the law requires both rationality and a true understanding of the law. If the risks are recognized and taken into account and algorithms are used for appropriate tasks, there will be plenty of work for them in public administration, and they can improve both the efficiency and the quality of decision making.

To keep this article focused, we will not address questions of data protection,[7] even though they overlap considerably with administrative law. We consider algorithms regardless of whether decisions concern natural persons or legal entities and whether they are based on personal or other data. The scope of this article also does not allow us to examine the issue of equality in depth. The article gives some examples of the use of robots in public administration (1), explains the technical nature of algorithmic administrative decisions (2) and, finally, examines how the principles of Estonian administrative law operate in this type of decision making (3). Its main question is whether, and when, a kratt can be taught to read and follow the law, since the legitimacy of governmental authority cannot be sacrificed to progress.

1. Algorithms in Public Administration

Artificial intelligence enthusiasts both in Estonia and abroad have pointed out that algorithms offer wide opportunities for cost savings, productivity gains and freeing officials from routine tasks.[8] An increasingly powerful fleet of computers and ever more intelligent software can handle enormous amounts of data and solve problems that are too complicated for humans. The public sector has to keep up with the private sector. Among other things, algorithms may become necessary in public administration to effectively supervise the use of artificial intelligence in business, such as automated trading on the stock exchange.[9]

Smart public administration systems can be classified into the following categories: communication with people,[10] internal activities,[11] preparation of decisions and decision making. This article is primarily concerned with the latter two. In Estonia, for example, the Agricultural Registers and Information Board uses algorithms to analyse satellite imagery and check compliance with grassland mowing obligations. The Tallinn City Government uses machine vision to measure traffic flows. The Ministry of the Interior wants to automate surveillance with a nationwide network of face and licence plate recognition cameras. The Unemployment Insurance Fund hopes soon to use artificial intelligence to assess the risk of unemployment.[12]

In the U.S., algorithms are used to determine family benefits and to analyse the risks that may justify separating a child from his or her family.[13] Algorithms have also been used to bar entry into the United States, to decide on pre-trial bail and parole, in counter-terrorism, to plan restaurant inspections, etc. It has been predicted that artificial intelligence will soon be used for airplane pilot licensing, tax refund assessment and the assignment of detainees to prisons.[14] Algorithmic predictive policing is used in both the U.S. and Germany to predict the time, place and perpetrator of an offense. Such systems analyse crime statistics together with camera and drone surveillance records to identify occurrence patterns for certain offenses and use the results to direct operational forces. Disputes over the use of algorithms have already reached the highest courts of European countries, such as the French and Dutch Councils of State, on issues such as university admissions and environmental permits.[15]

2. Technical Background

2.1. Basic Concepts

To understand artificial intelligence, we must first introduce some data science concepts whose definitions are far from settled.[16] Nevertheless, we will attempt an overview.

Artificial intelligence can be understood as the ability of a computer system to perform tasks commonly associated with the human mind, such as perceiving and understanding information, communicating, discussing and learning. These terms must be understood as functional metaphors, because machine “learning” is not actually the same as human learning. Artificial intelligence has many branches: automated decision support, speech recognition and synthesis, image recognition and so on. In our context, a robot is an artificial intelligence application, i.e. an intelligent system.[17]

Data mining is the process of extracting new knowledge (generalizations, data correlations and recurring patterns) from large amounts of data (big data) using statistical methods.[18] Statistical methods have long allowed analysts to build mathematical models from data sets that describe what is happening in nature or society. These models can, in turn, help assess and classify new situations and predict the future, such as the weather or criminal recidivism. This becomes particularly effective if the models are built with self-learning (machine learning) algorithms.[19]

An algorithm is an exact set of mathematical or logical instructions, or more generally a step-by-step procedure for solving a given problem (a cake recipe is an everyday example). An algorithm expressed in a programming language is a computer program. A distinction is drawn between (1) algorithms whose behaviour is entirely defined by humans and (2) algorithms that change their parameters autonomously as they learn.[20] The systems that automate traditional decision-making processes in public administration (expert systems) are based on the former. Artificial intelligence applications in public administration are mostly based on learning algorithms (sometimes also on more sophisticated non-learning algorithms).
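To make the distinction concrete, here is a minimal Python sketch with invented names and data (`fixed_rule`, `learn_threshold` and the income figures are hypothetical, not taken from any real system). In type (1) a human writes the threshold into the program; in type (2), shown here in a deliberately tiny form, the threshold is chosen from past cases, so the program's behaviour changes when the data change.

```python
# Hypothetical illustration of the two kinds of algorithms described above.
# All names, thresholds and data are invented for this sketch.

def fixed_rule(monthly_income: float) -> bool:
    """Type (1): the decisive threshold is written into the program by a human."""
    return monthly_income < 1000.0

def learn_threshold(cases: list[tuple[float, bool]]) -> float:
    """Type (2), in miniature: pick the income threshold that misclassifies
    the fewest past cases. Different data yield a different threshold."""
    best_threshold, fewest_errors = cases[0][0], len(cases) + 1
    for candidate in sorted(income for income, _ in cases):
        errors = sum((income < candidate) != label for income, label in cases)
        if errors < fewest_errors:
            best_threshold, fewest_errors = candidate, errors
    return best_threshold

past_cases = [(500.0, True), (800.0, True), (1200.0, False), (1500.0, False)]
print(fixed_rule(1100.0))            # False: the human-set threshold is 1000
print(learn_threshold(past_cases))   # 1200.0: derived from the data
```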

Machine learning is the process by which an artificial intelligence system improves its performance by acquiring new knowledge or skills or reorganizing existing ones. It uses learning algorithms to assess situations or make predictions (e.g. making diagnoses, detecting credit card fraud, predicting crime). There are many machine learning techniques with different characteristics: linear and logistic regression, decision trees, decision forests, artificial neural networks, etc.[21] In the most widespread technique, supervised learning, the algorithm is first trained on training data: a large number of cases in which both the input values, or features (e.g. payment behaviour data), and the output values (e.g. solvency) are known.

Once trained, the application must calculate the output values for new cases from their input data on its own. These outputs can be numbers (regression) or, for example, yes/no answers (classification). The core element of a learning algorithm is its optimization or objective function: the mathematical expression of the algorithm’s task, which contains a set of so-called weight parameters.[22] As it learns, the robot tries out combinations of weights and selects as its working model the one whose outputs deviate least from the relationships given in the training data, on the assumption that it will also work best on future cases. These operations are repeated hundreds, thousands or even millions of times.[23]
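The training loop described above can be sketched in a few lines of Python. This is a minimal sketch assuming logistic regression with a single input feature: the objective function is the average log-loss, the weights are `w` and `b`, and gradient descent adjusts them over thousands of repetitions. The data (numbers of late payments and whether a debtor became insolvent) are invented for illustration.

```python
import math

def sigmoid(z: float) -> float:
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(w: float, b: float, xs: list[float], ys: list[int]) -> float:
    """Objective function: average deviation of predictions from known outputs."""
    eps = 1e-12  # avoids log(0)
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(w * x + b)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(xs)

def train(xs: list[float], ys: list[int], lr: float = 0.1, epochs: int = 20000):
    """Gradient descent: nudge the weights repeatedly to reduce the log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

# Hypothetical training data: late payments (input) and insolvency (output, 1 = yes).
late_payments = [0, 1, 2, 3, 4, 5, 6, 7]
insolvent = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = train(late_payments, insolvent)
print(log_loss(0.0, 0.0, late_payments, insolvent) > log_loss(w, b, late_payments, insolvent))  # True
print(sigmoid(w * 7 + b) > 0.5)  # a new case with 7 late payments is classified as high risk
```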

By automated administrative decisions we mean any administrative decision that is prepared or made using automation. This can be based on simpler or more sophisticated non-learning algorithms (expert systems) as well as on machine learning.[24] For example, land tax statements in Estonia are made entirely according to set rules and require no cleverness on the part of a computer.
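By contrast, a rule-based calculation of this kind needs no learning at all. The sketch below assumes, for illustration only, that the tax equals the assessed value of the land multiplied by a municipal rate; the function name and the figures are hypothetical, and statutory exemptions and other details of the actual land tax rules are deliberately omitted.

```python
from decimal import Decimal, ROUND_HALF_UP

def land_tax(assessed_value: Decimal, rate_percent: Decimal) -> Decimal:
    """Simplified, fixed rule: assessed value x annual rate, rounded to cents.
    Identical inputs always give identical outputs; nothing is learned."""
    if assessed_value < 0 or rate_percent < 0:
        raise ValueError("inputs must be non-negative")
    tax = assessed_value * rate_percent / Decimal(100)
    return tax.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)

print(land_tax(Decimal("20000"), Decimal("1.5")))  # 300.00
```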

An algorithmic administrative decision, in the narrower sense, is a decision made with the help of artificial intelligence. Automated administrative decisions can be divided into fully automated and semi-automated ones; the latter are approved by an official. Sometimes the computer decides, on the basis of predefined criteria, whether it can make a final decision itself, such as granting a tax refund claim, or whether an official must decide.[25] The terms “automated” and “algorithmic” decision are sometimes used synonymously or conflated. It must be borne in mind, however, that the learning capacity of artificial intelligence brings public administration both new opportunities and new problems.[26]
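A semi-automated workflow of this kind can be sketched as a routing function. The criteria below (an amount ceiling, agreement with third-party data, no previous irregularities) and the limit of 500 are hypothetical assumptions, not the criteria of any real tax authority. One design choice worth noting: the sketch lets the machine grant only clear-cut claims and sends everything else, including every potential refusal, to an official.

```python
from dataclasses import dataclass

AUTO_GRANT_LIMIT = 500.0  # hypothetical ceiling for fully automated decisions

@dataclass
class RefundClaim:
    amount: float
    matches_third_party_data: bool   # e.g. employer or bank reports agree
    previous_irregularities: bool

def route(claim: RefundClaim) -> str:
    """Return 'auto_grant' only if every hypothetical criterion is met;
    otherwise the claim goes to an official, who makes the final decision."""
    if (claim.amount <= AUTO_GRANT_LIMIT
            and claim.matches_third_party_data
            and not claim.previous_irregularities):
        return "auto_grant"
    return "official_review"

print(route(RefundClaim(120.0, True, False)))   # auto_grant
print(route(RefundClaim(120.0, False, False)))  # official_review
```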

2.2. The Basic Characteristics of Machine Learning

Self-learning algorithms can handle trillions of data cases, each with tens of thousands of variables. Estonian institutions have for some time been collecting data into large data warehouses for analytical purposes.[27] Machine learning does not change the essence of data analysis but amplifies it: machine learning (in its current capacity) can only discover statistical correlations, not causal, natural or legal relationships. Depending on how refined the model is, the output of machine learning may reflect the real world and anticipate the future with remarkable accuracy. However, probabilistic calculations always retain some error rate.[28]
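The difference between correlation and causation can be shown with a small Python sketch on invented data. Here both ice cream sales and drowning incidents are generated from temperature, so they correlate strongly although neither causes the other; a learning algorithm given only these two columns would still treat one as a good predictor of the other. The Pearson coefficient is computed by hand to keep the example self-contained.

```python
import math

def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equally long series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

# Hypothetical monthly data: temperature drives both series.
temperature = [2, 5, 10, 15, 20, 25]
ice_cream_sales = [10 * t + 50 for t in temperature]
drownings = [t // 5 for t in temperature]

r = pearson(ice_cream_sales, drownings)
print(round(r, 2))  # close to 1, yet banning ice cream would not prevent drownings
```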

Learning algorithms and the models created during learning are so large and complex that a human, even an experienced computer scientist or the creator of the algorithm, may not always be able to observe or explain how a machine learning application works (opacity, or the black-box effect). As a rule, the more effective the algorithm, the more opaque it is. Individual elements of a sophisticated machine learning system can be traced, such as individual trees in a decision forest, but this reveals little about the process as a whole. Sometimes opacity is actually desirable, to protect personal data or business secrets or to prevent the addressee of a decision from deceiving the algorithm.[29]

Because of the statistical nature of machine learning, very large amounts of data are needed. Unfortunately, or rather fortunately, there is too little data on terrorist acts, for example, to make accurate estimates.[30] Besides quantity, data quality and standardization are no less important: accuracy, relevance, organization, compatibility, comprehensiveness, impartiality and, above all, security. This applies both to the training data and to the operating data used when the algorithm is actually deployed. All machine learning predictions are based on training data and previous experience. Another golden rule of machine learning is: garbage in, garbage out. Poor data quality can result in a variety of distortions, including failure to capture all the factors that affect the assessment, whether because this is impossible or because they are considered unimportant or not worth the cost.[31] At the same time, a large number of decisions amplifies the impact of any error rate in absolute terms.
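The last point is simple arithmetic, sketched here in Python with hypothetical volumes and a hypothetical accuracy of 99%: the error rate stays the same, but the number of people wrongly affected grows with the number of decisions.

```python
def wrong_decisions(n_decisions: int, accuracy_percent: int) -> int:
    """Expected number of erroneous decisions (rounded down) for a given
    accuracy expressed in whole percent."""
    return n_decisions * (100 - accuracy_percent) // 100

for volume in (1_000, 100_000, 1_000_000):
    print(volume, wrong_decisions(volume, accuracy_percent=99))
# 1000 10
# 100000 1000
# 1000000 10000
```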

Models developed and decisions made through machine learning cannot be completely foreseen or steered.[32] Nonetheless, people (programmers, analysts, data scientists, system developers and, ultimately, end users) play a huge role in, and bear responsibility for, the quality of machine learning outcomes. The end result is influenced by all kinds of strategic decisions and fine-tuning: defining the relevant output value (target feature),[33] creating the objective function, selecting and developing the type of algorithm, tuning the algorithm to be more cautious or bolder, testing and auditing. We must bear in mind that two different types of algorithms, each of which may be very accurate on its own, can give completely different answers to the same case.[34]
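The following Python sketch, using invented solvency data, shows how this can happen. Two hypothetical models, a simple income threshold and a one-nearest-neighbour classifier over income and debts, both classify every training case correctly, yet they disagree about a new applicant. Neither answer is "wrong" by the standards of the training data; the difference comes from modelling choices made by people.

```python
# Hypothetical training data: (monthly income, debts) -> solvent?
training = [((3000, 100), True), ((2500, 200), True),
            ((800, 50), False), ((600, 900), False)]

def threshold_model(case: tuple[int, int]) -> bool:
    """Model A: looks only at income."""
    income, _debts = case
    return income >= 1500

def nearest_neighbour_model(case: tuple[int, int]) -> bool:
    """Model B: copies the label of the most similar training case."""
    def squared_distance(other: tuple[int, int]) -> int:
        return (case[0] - other[0]) ** 2 + (case[1] - other[1]) ** 2
    _, label = min(training, key=lambda item: squared_distance(item[0]))
    return label

# Both models reproduce every training label...
assert all(threshold_model(x) == y for x, y in training)
assert all(nearest_neighbour_model(x) == y for x, y in training)

# ...but disagree on a new applicant.
new_applicant = (1600, 1000)
print(threshold_model(new_applicant), nearest_neighbour_model(new_applicant))  # True False
```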

3. Rule of Robots or Smart Rule of Law?

The abovementioned technicalities of machine learning pose significant legal challenges in public administration. Machine learning can produce great results statistically, but in certain cases, a lot can also go wrong.

3.1. Administrative Risks: Digital Delegation and Privatization

The authority of the government can only be exercised by a competent institution. This institution may use automatic devices, such as a traffic light or a computer, for this purpose. The more discretion is given to the algorithm, the more pressing the question of whether the decision is actually subject to the control of the competent institution or is running its own course.[35] In our view, the decision is always formally attributed to the institution using the algorithm, which remains legally responsible for it. But the more significant the decision, the more a substantive problem arises: can the institution make the algorithm sufficiently consider all the important details of the decision?[36]

We can assume that the state will, in practice, need to delegate the development of its algorithms largely to private IT companies. It is therefore important not to lose democratic control over the companies directly managing the algorithms, for example by ensuring that they do not gain full control over the content of administrative decisions or maximize their profits at the expense of decision quality. As we develop our e-government, we therefore have to analyse whether current public procurement and administrative cooperation laws sufficiently address these risks.[37]

3.2. Impartiality

For decades, people have hoped that artificial intelligence could create an unbiased, selfless decision maker, free of any comfort zone, that treats everyone equally. Unfortunately, machine learning has run into serious problems of bias in practice. Artificial intelligence tends to discriminate against some groups of people when the quality of the input data or of the algorithm itself is inadequate. For example, if some groups have previously been monitored more closely than others, this is reflected in the training data (e.g. Black people in predictive policing or recidivism assessment systems in the U.S.).[38]
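The mechanism can be reduced to a few lines of arithmetic. In this hypothetical Python sketch, two groups offend at exactly the same rate, but offences in group A are three times as likely to be detected and recorded. A model that learns risk from recorded cases alone will rate group A as three times riskier. All figures are invented.

```python
def learned_risk(population: int, offence_percent: int, detection_percent: int) -> float:
    """Risk as a model trained only on *recorded* offences would see it."""
    actual_offences = population * offence_percent // 100
    recorded_offences = actual_offences * detection_percent // 100
    return recorded_offences / population

# Same population and same true offence rate (5 %); only monitoring differs.
risk_a = learned_risk(10_000, offence_percent=5, detection_percent=60)
risk_b = learned_risk(10_000, offence_percent=5, detection_percent=20)
print(risk_a, risk_b)  # 0.03 0.01
```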

3.3. Legal Basis

Under § 3 (1) 1 of the Constitution of Estonia, governmental authority may be exercised solely pursuant to the law. The question of when and how the legislature should authorize institutions to use machine learning cannot be answered simply or uniformly. If machine learning is used only to prepare administrative decisions (e.g. to forecast pollution levels before issuing an environmental permit) and the final decision is made by a human official following the normal procedural rules, machine learning can be regarded as a procedural detail that falls within the administrative authority’s procedural discretion (Administrative Procedure Act § 5 (1)) and therefore requires no special statutory provisions.[39]

If the human’s role in decision making is reduced to that of a rubber stamp or disappears altogether, the matter may require parliamentary authorization. In each area (licences, social benefits, environmental protection, law enforcement, immigration, etc.), the widespread use of intelligent systems raises specific issues that need to be resolved separately and balanced with appropriate substantive and procedural safeguards.[40] Legal issues aside, it would be wise to consider the risks to public finances: does the legislature become a slave to the robot? An expensive and complicated implementation system may start to obstruct legislative changes and political will.[41]

§ 46² (1) of the Taxation Act grants the implementing authority broad powers to make automated administrative decisions in the field of taxation without intervention by an official. A more detailed list must be established by the Ministry of Finance.[42] The law does not restrict the type or manner of decisions that can be automated. Because its legal definitions are relatively precise, taxation is considered well suited to automation. Here, reasonable reliance on a broad mandate should not produce unacceptable results. However, a blanket authorization[43] to fully automate any administrative decision could violate § 3 (1) and § 14 of the Constitution.

3.4. Supremacy of the Law

Pursuant to § 3 (1) 1 of the Constitution, the exercise of governmental authority may be guided by an algorithm only if the letter of the law is followed at all times during its application.[44] This requires a human or a self-learning system to convert the law into an algorithm. In some cases this may be possible in principle, but it would be a substantial task requiring the developer to have in-depth knowledge of information technology, mathematics and law.[45] However, many legal provisions cannot be expressed in the unambiguous variables an algorithm requires.[46] This is due both to the inevitable vagueness of the law’s instrument, human language, and to the deliberate leeway that keeps legislation flexible.[47]

Instead of step-by-step instructions (conditional programs), the law often uses outcome-oriented final programs:[48] general objectives, such as improving living environments, public involvement and informing the public, balancing and integrating interests, sufficiency of information, and expedient, reasonable and economical land use (Planning Act §§ 8–12); discretionary powers, such as the right of a law enforcement agency to issue a precept to a person liable for public order to counter a threat or eliminate a disturbance (Law Enforcement Act § 28); undefined legal terms, such as overriding public interest (Water Act § 192 (2)) or danger (Law Enforcement Act § 5 (2)); and general principles, such as human dignity, proportionality and equal treatment (Constitution § 10, § 11 sentence 2 and § 12).

Because the law is indeterminate, legal subsumptions[49] (the decisions needed to apply a law, such as whether an object is a building within the meaning of the Building Act, whether a person is a contracting entity within the meaning of the Public Procurement Act, whether the recipient of rural support is sustainable, or how to define a goods market in competition supervision) are not mere acts of formal logic but require judgement. Before resolving a situation, the decision maker must interpret the norm to determine whether the legislator intended it to cover the situation. Moreover, decisions must be made in situations that did not occur to the legislature, such as a new cross-border tax avoidance scheme. Here, the person applying the law must assess whether he or she is dealing with permissible tax optimization or abuse (Taxation Act § 84). Those who implement the law (ministers, officials, judges and contracting parties) continue to interpret it and fill the gaps in the regulatory process started by parliament. It is up to them to make the law concrete.[50]

There is some similarity to machine learning here: a learning algorithm is not complete in the form in which humans created it. It keeps developing and can create new models to classify situations. Could the legislator’s real will not be modelled in this way as well? Is this not a standard classification task for a smart system, much like finding cat pictures? Unfortunately, the source material of machine learning, data from the past, cannot in principle be sufficient to develop the law further as code.[51] The person applying the law must also take account of existing judicial and administrative practice[52] and the generalizations that crystallize out of it, but his or her sources must not be limited to these.[53]

An official or a judge must be able to perceive, understand and apply a much broader context: the history of the law, the systematics of norms, the purpose of the law and the general idea of justice, and especially the direct and indirect effects of the decision. Not every field can produce enough quantitative or qualitative data to describe all the layers of the law and its operating environment, and (current) smart systems are far from able to follow all of this material in real time. Therefore, many situations require a rational being who understands the peculiarities of the specific situation being regulated and, where necessary, develops a new rule appropriate to it instead of searching for one among previously tested patterns.[54]

The vagueness of legal concepts expressed in natural language is not a flaw in the law. It must remain possible to argue over the law in order to reach fair decisions in specific situations. But this requires open and honest discussion over different interpretations and ways of assessing the facts. Even if you translate the law into zeros and ones, you don’t escape the need to interpret it. This need would simply move from the decision making stage to (1) the expert system creation and calibration stage or (2) the intelligent system learning stage.[55] In both cases, at least the persons concerned and presumably also the competent administrative institution (considering the complexity of machine learning) lack an effective opportunity to have a say in the interpretation. Because of their complexity, the decisions made by a self-learning algorithm are not just difficult to predict, they are structurally unpredictable.[56]

But how can you ensure that the algorithm will not deviate from the law as it learns? Periodic testing and auditing are not sufficient, because tests cannot anticipate or run through every possible real-life scenario either. The costs of such extensive calibration and testing would eventually outweigh the benefits.[57] Moreover, the machine-language translation of a law that an administrative robot could supposedly follow is anything but static. It is modified not only by new laws, interpretations in case law[58] and constitutional review decisions, but also by changes in the law’s context: society. Weak artificial intelligence cannot perceive or apply these changes itself.

The main question here is not whether, and to what extent, a machine makes mistakes. A machine does not perform any legal reasoning. At best, and only with sufficient amounts of data, machine learning (in its current capacity) can merely mimic legal decisions through statistical operations; it cannot comprehend the content of the law or make rational decisions based on it.[59] Yet that is precisely what § 3 (1) 1 of the Constitution demands. We do not claim that expert or smart systems cannot replace any legal assessment at all, only that solutions to the problems described above must be found when such systems are used.

3.5. Discretionary Power

These problems are exacerbated in discretionary decisions, where the law does not prescribe clear instructions, such as whether to require the demolition of a building, what requirements to set for service providers in a procurement, whether and under what conditions to allow extraction in an area with groundwater problems, or where to build a landfill. Of course, discretionary power does not give authorities the right to make arbitrary decisions. Discretionary decisions must also respect the general principles of justice, the purpose of the law and all the relevant facts of each individual case (Administrative Procedure Act § 4 (2)).[60]

An algorithm defined entirely by humans is not suited to making discretionary decisions, because circumstances are unpredictable. True, there is some standardization and generalization in the exercise of discretion, for example through internal administrative rules, but officials must retain the right and the duty to deviate from such standards in atypical cases.[61] Optimists believe, however, that although the capability is lacking at present, machine learning, unlike rigid algorithms, will soon be able to use its dynamic discretionary parameters to work within value principles and the bounds of discretion.[62]

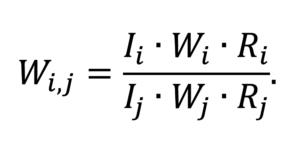

This does not seem realistic in the near future.[63] First, decisions of this kind are too unique to generate enough data for machine learning to model them. Second, rules of discretion and the general principles of justice may seem like simple maxims at first glance. They may even be represented as mathematical formulas, but this does not guarantee that they can be applied in practice through machine learning. Let us illustrate this with R. Alexy’s proportionality (weight) formula, applied to the example of an injunction to shut down a fish factory infected with a dangerous bacterium.

Here, i and j are the principles weighed in the decision (in this case, fundamental rights: consumer health versus the freedom to conduct business). Wi,j is the concrete weight of principle i relative to principle j. For the Veterinary and Food Board to issue an injunction, i (health) must outweigh j (freedom to conduct business); in other words, Wi,j must be greater than 1. I is the intensity of interference with a given principle, expressing the extent of the potential damage if that principle gives way (consumer illness or death; factory bankruptcy and unemployment for its many workers). W is the abstract weight of the principle and reflects the general importance we attach to public health and to the freedom to conduct business. R is the probability of the damage that could result from infringing the principle (for example, if the factory stays open, its products will not necessarily be contaminated, though they may be; if the factory is shut down, bankruptcy is certain).[64]
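The arithmetic of the formula can be sketched in Python as a minimal illustration, assuming the multiplicative form described above, Wi,j = (Ii · Wi · Ri) / (Ij · Wj · Rj). The input values are hypothetical: they loosely follow the geometric scales associated with Alexy’s work (1, 2, 4 for light, moderate and serious; 1, 1/2, 1/4 for degrees of certainty), but assigning them to the fish factory case is our own assumption. The calculation is trivial; the result hinges entirely on the values fed in.

```python
from fractions import Fraction

def weight(i_i, w_i, r_i, i_j, w_j, r_j) -> Fraction:
    """Concrete weight of principle i relative to principle j:
    (intensity x abstract weight x probability) of i divided by the same for j."""
    numerator = Fraction(i_i) * Fraction(w_i) * Fraction(r_i)
    denominator = Fraction(i_j) * Fraction(w_j) * Fraction(r_j)
    return numerator / denominator

# Hypothetical values for the fish factory case.
# i = consumer health: serious interference (4), high abstract weight (4),
#     contamination possible but uncertain (1/2).
# j = freedom to conduct business: serious interference (4), moderate abstract
#     weight (2), bankruptcy certain if the factory is closed (1).
w_ij = weight(4, 4, Fraction(1, 2), 4, 2, 1)
print(w_ij, w_ij > 1)  # 1 False: a stalemate, Wi,j is not greater than 1

# Treating contamination as certain instead flips the outcome.
print(weight(4, 4, 1, 4, 2, 1))  # 2
```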

Even if we disregard other criticism of this formula,[65] the real difficulty lies not in the calculation but in assigning correct values to its variables and in the arguments over whether and to what extent one principle (fundamental right) or another is infringed, which facts are proven and whether they are even relevant to the judgement. The likelihood of a given outcome (R) may depend on very special circumstances that did not occur to those who supplied the algorithm’s training and operating data. Moreover, judgements on the importance of principles (W) and the intensity of interference (I) are value-based and can only be made with an acute perception of the broad context accumulated over a long evolution of law and society. If this information is not readily available to the human official, the gap can be bridged through communication between the decision maker and the parties in an inclusive administrative proceeding (3.6 below).

The weak machine learning technologies available today and in the near future are limited in their ability to understand context and the content of communication.[66] This also applies to undefined legal concepts (public interest, material harm, etc.).[67] If, for example, § 5 (2) of the Law Enforcement Act defines a threat as a sufficiently probable offense (e.g. food poisoning), it still does not quantify what probability is sufficient. This is a legal judgement based on value judgements about the significance of one legally protected interest or another, not merely a statistical prognosis of the occurrence of damage.
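The point can be made concrete with a hypothetical Python sketch of how a developer might encode “threat” for an automated system. The statute gives no figure, so the thresholds below, and the idea of varying them by the legal interest at stake, are arbitrary assumptions written into the code. Whoever chooses these numbers is making exactly the value judgement described above, only outside any administrative proceeding.

```python
# Hypothetical thresholds: the law does not quantify "sufficient probability".
# Each number is a value judgement hidden in the code, not a legal rule.
SUFFICIENT_PROBABILITY = {
    "life_and_health": 0.05,  # even a small risk may be enough
    "property": 0.40,         # a higher probability is demanded
}

def is_threat(predicted_probability: float, protected_interest: str) -> bool:
    """Treat a predicted offense as a 'threat' if its probability reaches the
    threshold chosen for the interest at stake (KeyError for unknown interests)."""
    return predicted_probability >= SUFFICIENT_PROBABILITY[protected_interest]

# The same statistical prognosis leads to different legal conclusions.
print(is_threat(0.10, "life_and_health"), is_threat(0.10, "property"))  # True False
```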

In our opinion, fully delegating a complex discretionary or judgement-based decision to an algorithm would constitute a gross error of discretion within the meaning of § 158 (3) 1 of the Code of Administrative Court Procedure (failure of an administrative authority to exercise its discretion). An algorithm can, however, be used as an aid.

3.6. Fair Proceedings and the Principle of Investigation

Fair proceedings, especially the right to be heard (Administrative Procedure Act § 40 (1)), play an important role in safeguarding both the substance of a decision and the dignity of the persons concerned.[68] In a state based on the rule of law, the law must be established and further developed through honest and open dialogue (open at least to the persons concerned). In decisions affecting large numbers of people, such as spatial planning and environmental permits, the right to express an opinion must also be open to the public. Contemporary algorithms (e.g. debate robots) can merely mimic such discussions, not actually (meaningfully) hold them. With machine learning, hearing the parties is all the more necessary, as the algorithm may not have been programmed or have learned to account for unpredictable circumstances. A rare event may be decisive for a correct prediction, just as a broken leg means a person will skip their weekly workout even if years of behavioural patterns suggest otherwise.[69]

C. Coglianese and D. Lehr point out that the right to a hearing is fairly flexible under U.S. law and that, in some situations, machine learning without a hearing could on average make more accurate decisions than humans after a hearing.[70] This is not adequate justification. A citizen or business that falls within the margin of error need not be placated by pretty statistics and retains the right to demand a lawful decision in its case. § 40 (3) of the Estonian Administrative Procedure Act provides several exceptions to the right to be heard. These exceptions can be supplemented by special laws where a hearing would be of little real use. But no general exception can be made for all algorithm-based administrative acts. The broader the authority’s discretion, the more necessary communication becomes in the proceedings and, therefore, the less room there is for fully automated decisions; in other words, when artificial intelligence is applied, the person concerned must retain the opportunity to interact with an official.[71]

The effective protection of rights and the public interest is guided by the principle of investigation in the Administrative Procedure Act (§ 6): an administrative institution is obliged to take the initiative in investigating all relevant facts. This is also a challenge for algorithms because they cannot deal with circumstances that have not been entered into their systems. The reality around us is not yet fully digitized or captured in machine-readable form by sensors. Therefore, a machine can only consider fragments of the actual situation in its analysis.[72] A human, by contrast, is able to take the initiative in searching for additional data from sources that have not been provided or are not listed in his or her manual.

Ignorance of important information does not exonerate a decision maker who breaches a prohibitive norm.[73] This is why German law requires the intervention of a human officer, obliging him or her to manually correct an automated decision in the light of additional circumstances.[74] However, if implemented intelligently, machine learning can serve the principle of investigation, for example by selecting tax returns that need more extensive manual control.[75]
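A minimal sketch of how a learned model might support, rather than replace, the principle of investigation: it only ranks tax returns so that officials know which ones to check manually. The feature names, weights, bias and capacity below are hypothetical assumptions and do not describe any tax authority's actual method; in practice, such weights would be learned from past audit outcomes.

```python
import math
from dataclasses import dataclass


@dataclass
class TaxReturn:
    return_id: str
    deductions_ratio: float  # hypothetical feature: claimed deductions / declared income
    late_filings: int        # hypothetical feature: late filings in previous years
    income_change: float     # hypothetical feature: relative change in declared income


# Hypothetical weights, standing in for parameters learned from past audit outcomes.
WEIGHTS = {"deductions_ratio": 3.0, "late_filings": 0.8, "income_change": -1.5}
BIAS = -2.0


def audit_risk(r: TaxReturn) -> float:
    """Logistic score between 0 and 1: estimated likelihood that a manual audit finds an error."""
    z = (BIAS
         + WEIGHTS["deductions_ratio"] * r.deductions_ratio
         + WEIGHTS["late_filings"] * r.late_filings
         + WEIGHTS["income_change"] * r.income_change)
    return 1.0 / (1.0 + math.exp(-z))


def select_for_manual_control(returns: list[TaxReturn], capacity: int) -> list[str]:
    """Rank returns by risk and return the IDs that officials should examine manually.

    The model decides nothing about the tax itself; it only directs human attention.
    """
    ranked = sorted(returns, key=audit_risk, reverse=True)
    return [r.return_id for r in ranked[:capacity]]


returns = [
    TaxReturn("A", deductions_ratio=0.1, late_filings=0, income_change=0.0),
    TaxReturn("B", deductions_ratio=0.9, late_filings=2, income_change=-0.4),
    TaxReturn("C", deductions_ratio=0.5, late_filings=0, income_change=0.2),
]
print(select_for_manual_control(returns, capacity=2))  # ['B', 'C']
```

The design keeps the legal decision with the official: the algorithm's output is only a work queue, which is the kind of supporting role described above.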

3.7. Reasoning

The reasoning behind decisions made by governmental authorities is a core element of a fair procedure. According to § 56 of the Administrative Procedure Act, an administrative act must state its legal and factual basis (the provision delegating authority and the circumstances justifying its application) and, if the act is based on discretion, at least the primary motives for choosing between different options (e.g. why the pulp mill should be in Narva and not Tartu or why the construction of a wind farm should be prohibited).

This is not a mere ethical recommendation but a fundamental, constitutional obligation.[76] A law enforcement mandate can only be granted to an entity that can demonstrate that its decisions are lawful, i.e. that they respect both the external limits and the internal rules of discretion. This brings up an important difference between the private and public sectors: the exercise of freedoms does not need to be justified, but the exercise of authority does. A person receiving a notice of tax assessment or a demolition injunction does not have to accept an official’s claim that “I don’t know why, but the machine made this decision about you.”

Neither the Administrative Procedure Act § 56 nor the Taxation Act § 462 makes exceptions for automatic, including algorithmic, administrative decisions. Such exceptions would violate the Constitution as well as generally accepted standards in democratic states based on the rule of law.[77] As we have seen, the creators of algorithms are often unable to explain the decisions made by a robot because of the opacity of machine learning. In 2011, Houston in the U.S. used an algorithmic decision-making process to terminate the employment contracts of teachers. During the ensuing litigation, the school administrator was unable to explain how the algorithm functioned, claiming that he had no ownership of or control over the technology.[78] The U.S. Government has also cited opacity as a matter of concern.[79]

Some experts see a possible solution to the problem of opacity in using artificial intelligence to develop language-processing programs to the point where a computer can analyse numerous prior justifications and synthesize a machine argument that resembles legal argumentation.[80] This method would still rely only on statistics, not on reasoning based on the methods of jurisprudence, so it could only offer an inadmissible semblance of reasoning. But reasoning must be genuine.[81]

There is a growing search for ways to increase transparency in machine learning based on the principles of accountability and explainability. Among other things, this requires greater access to training and source data, to data processing, and to algorithms and their learning processes.[82] These demands collide with business secrets, internal information, personal data protection and, above all, the limits of a human’s ability to analyse the work of an algorithm. Moreover, the most important aspect of the reasoning for an administrative act is not a technical description of how the decision was made but the motives explaining why this particular decision was made. Even in the case of decisions made by humans, we are not interested in the biochemical processes in the decision maker’s brain but in his or her explanations. Expanding the overall transparency of machine learning therefore contributes little to the reasoning of individual cases.[83]

As an alternative, development has started on so-called explainable artificial intelligence (xAI). Since the actual mathematical processes of machine learning are too complex and extensive for humans to investigate directly in a productive way, developers are trying to employ artificial intelligence for this task as well, for example by approximating a complex model with a simpler and more comprehensible algorithm.[84] There are also methods that construct similar fictitious situations in which the algorithm gives a different answer. To do this, some variables are ignored or changed (e.g. gender or age) while others are kept constant. This technique can be used to identify the criteria that were instrumental to a decision.[85] But this still only takes us halfway: it explains the background of a statistical judgement, not a legal judgement itself.[86] That may suffice if the administrative act solves a complex but mathematically solvable problem, such as predicting a development or the likelihood of an event (e.g. the increase or decrease in the population of a protected species when a railway is built).[87]
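The counterfactual technique described above can be sketched as follows, assuming a hypothetical scoring model and hypothetical features (years_insured, age, gender) chosen only for illustration; no real administrative system is modelled. The probe changes one variable at a time while holding the others constant and reports which changes would flip the decision.

```python
from typing import Callable, Dict, List, Tuple

Case = Dict[str, int]


def toy_model(case: Case) -> bool:
    """Hypothetical stand-in for an opaque model: approve if the score reaches 50 points."""
    score = 10 * case["years_insured"] - case["age"] + 40 * case["gender"]
    return score >= 50


def counterfactuals(
    model: Callable[[Case], bool],
    case: Case,
    alternatives: Dict[str, List[int]],
) -> List[Tuple[str, int]]:
    """Change one feature at a time, holding all others constant, and
    return the (feature, value) changes that flip the model's decision."""
    original = model(case)
    flips = []
    for feature, values in alternatives.items():
        for value in values:
            variant = dict(case)  # copy, so the original case stays unchanged
            variant[feature] = value
            if model(variant) != original:
                flips.append((feature, value))
    return flips


applicant = {"years_insured": 8, "age": 40, "gender": 0}
alternatives = {"age": [30, 50], "gender": [1], "years_insured": [5, 9, 12]}

print(toy_model(applicant))  # False
print(counterfactuals(toy_model, applicant, alternatives))
# [('age', 30), ('gender', 1), ('years_insured', 9), ('years_insured', 12)]
```

Here the probe reveals that the applicant would have been approved had the gender variable been different, which is a legally relevant finding. It still does not say whether the decision is lawful; that assessment remains a legal judgement.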

Such judgements and predictions can be necessary, but the obligation to give reasons is broader. In general, an administrative act may need to explain why legal provision x is applied and not y; why a statutory provision is interpreted as a and not b; what facts have been ascertained and why; why these facts are pertinent under the relevant law;[88] and why it is necessary to implement a certain measure (e.g. why a dangerous structure should be demolished instead of rebuilt). On all of these issues, the reasoning must also answer those positions of the parties to the proceeding that the decision does not follow. A robot cannot give adequate explanations for these thought operations because it does not perform such operations.[89] If an administrative act requires a substantive legal justification, then the current level of information technology means that we need to put a human into the “circuit”.[90]

3.8. Judicial Review

To ensure legality, it is advisable to subject both private and public sector artificial intelligence applications to multifaceted monitoring (documentation, auditing, certification, standardization).[91] This is necessary but cannot replace the judicial protection of persons who find that their rights may have been violated (Constitution § 15 (1), European Convention on Human Rights Art. 6 and 13, Charter of Fundamental Rights of the European Union Art. 47). If sufficient substantive and factual reasoning is given for administrative decisions made using an algorithm, judicial control poses no fundamental problem. But difficulties arise in the absence of such reasoning.[91a]

C. Coglianese and D. Lehr point out that courts tend to defer to agencies on technically complex issues.[92] But here it is the algorithm that makes the decision complex! Implementing algorithms cannot become a universal magic wand that frees the executive institution from judicial review of any decision. Loosening control is conceivable only in situations where judges would defer for other reasons even without the use of artificial intelligence, such as the economic, technical or medical complexity of the content, or where the infringement of the rights of the affected individuals is not excessively intense. A complex administrative decision may thus include elements subject to different intensities of control.[93] E. Berman discusses this matter. She considers the opportunities for using algorithms to be proportional to the leeway afforded by discretion. Her argument is somewhat confusing because it does not account for the breadth of discretion afforded by the law or for the significant influence of general principles and fundamental rights. Her ultimate conclusion is that control may be allowed to weaken where infringements are not very grievous and regulation is sparse, and that it can disappear altogether in situations where no one’s rights are affected (e.g. deciding where to locate police patrols).[94] We can agree with this conclusion. As a general rule, administrative court proceedings retain control of rationality (including proportionality), which, except for routine mass decisions, requires that substantive administrative decisions be at least monitored by humans and carry a legal justification.

The problem of control cannot be solved simply by the administrative institution disclosing the content and raw data of the algorithm to the court.[95] To analyse this material, the court would need its own IT expertise or expert assistance. This is neither realistic given reasonable procedural resources nor consistent with the constitutional roles of the branches of power (Constitution § 4). It would place the primary responsibility for the compliance of an algorithm in the hands of the court, whereas §§ 3 and 14 of the Constitution place it in the hands of the executive power.

There is no need to turn algorithms into direct objects of judicial control. It is not necessarily important whether the data is distorted or contains calibration errors or bias, since these deficiencies may not affect the end result. Nor is it the responsibility of the appellant to prove such deficiencies when challenging an algorithmic decision. And compensating for the complexity of the algorithm with longer appeal periods is not a reasonable solution either. Algorithmic administrative decisions must also become final and conclusive within a reasonable amount of time.[96] What matters in court is that administrative decisions taken by an algorithm are legally justifiable.

The executive institution must be convinced of the legality of its decision, and the process of forming that conviction must be traceable without any special knowledge of computer science. This amounts to a so-called administrative Turing test: citizens and businesses must not be able to detect any difference between law enforcement decisions that the executive institution makes with the help of artificial intelligence and those it makes without it.[97] An institution may rely on the help of an algorithm in making a decision if it can meet this standard. If not, a representative of reasoned thought – an official – must step in.

4. Conclusion: The Division of Labour Between Kratt and Master

Administrative decisions vary widely in terms of content, legal and factual framework and decision-making process. Depending on the field and situation, administrative law may allow rigid standard solutions, generalizations and simplifications to a greater or lesser extent.[98] There are quite a few routine decisions that are subject to clear rules (e.g. in the areas of social benefits and taxes), and these can be entrusted to computers working with non-learning or learning algorithms.[99] It may also make sense to use self-learning algorithms in areas where the authority has broad latitude and decision making requires analysis of a predominantly non-legal nature (e.g. determining the locations of police patrols or modelling protected populations).[100] But the important and complex decisions in society (e.g. where to build a railway or whether to build a nuclear power plant) are not routine and cannot be automated, at least not fully, because appropriate training data is lacking. These decisions need human judgement.[101]

In situations that fall between those two extremes, it is realistic to expect cooperation between the robot and the official where the scope of each role may vary greatly depending on the field and situation:[102]

- Administrative decisions that are routine but not quite mechanical, that are favourable to the person concerned without negative side effects for the public, and whose factual circumstances are comprehensible to an algorithm can be issued as fully automated administrative decisions. However, the affected party must retain the right to request human review of the decision. Procedurally, one conceivable solution would be to issue a fully automated administrative act as the initial act and to allow the person, for example, one month to apply for a manually issued administrative act.[103]

- Administrative decisions of moderate complexity may require approval by an official, but here the ‘rubber stamp’ phenomenon must be avoided. The official should first examine the arguments of the parties to the proceeding and the views of other authorities, assess whether the facts are comprehensive and exhaustive in light of the investigative principle, and prepare a justification for the administrative act together with a thorough evaluation of his or her choices. As technology advances, there is reason to believe that machines will be able to offer growing help in preparing these justifications (to explain the aspects that tipped the scales, to prepare a draft justification or at least its more routine parts).

- For factually or legally complex decisions, the weight of the decision must be borne by humans, at least until stronger artificial intelligence is developed,[104] though learning algorithms can be used to evaluate individual elements of those decisions. But officials should take direct statements from witnesses, communicate directly and humanely with the parties to the proceedings and make principled and justified decisions.
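The division of labour proposed above could be sketched as a routing rule. This is a deliberately simplified, hypothetical illustration: the boolean flags stand in for legal assessments that cannot actually be reduced to flags, and the deadline helper assumes a simple calendar-month rule clamped to the end of shorter months, whereas the computation of a statutory time limit is governed by the applicable law.

```python
import calendar
from dataclasses import dataclass
from datetime import date
from enum import Enum


class Track(Enum):
    FULLY_AUTOMATED = "fully automated act; human review on request"
    OFFICIAL_APPROVES = "algorithm assists; an official examines and approves"
    HUMAN_DECIDES = "official decides; algorithms only assess individual elements"


@dataclass
class Case:
    # Hypothetical flags standing in for legal assessments made in advance.
    routine: bool                 # routine, but not necessarily mechanical
    favourable: bool              # favourable to the person, no negative side effects for the public
    machine_readable_facts: bool  # factual circumstances comprehensible to an algorithm
    is_complex: bool              # factually or legally complex


def route(case: Case) -> Track:
    """Assign a case to one of the three tiers of cooperation between kratt and official."""
    if case.is_complex:
        return Track.HUMAN_DECIDES
    if case.routine and case.favourable and case.machine_readable_facts:
        return Track.FULLY_AUTOMATED
    return Track.OFFICIAL_APPROVES


def review_deadline(issued: date) -> date:
    """Last day to apply for a manually issued act, assuming a simple one-calendar-month
    period clamped to the end of a shorter month (a simplification, not a legal rule)."""
    year = issued.year + issued.month // 12
    month = issued.month % 12 + 1
    day = min(issued.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


print(route(Case(routine=True, favourable=True, machine_readable_facts=True, is_complex=False)).name)
# FULLY_AUTOMATED
print(review_deadline(date(2020, 1, 31)))  # 2020-02-29
```

Complexity is checked first so that a complex case never reaches full automation, whatever its other attributes; every case that is neither complex nor clearly suitable for automation falls to the middle tier by default.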

With all of these variations, high-quality machine learning is particularly suited to assisting officials in those areas of their work where they need to make predictions about circumstances or events on which humans also lack certain knowledge (e.g. the likelihood of offences). But the legal decision (e.g. whether the prediction is sufficient to justify an intervention) must be made by a human.[105] Machine learning could also be used in highly uncertain situations where a decision needs to be made but even officials would have trouble presenting rational justifications (e.g. a long-term environmental impact).[106] In any case, using machine learning to perform administrative tasks requires a sense of responsibility on the part of the institution as well as legal, statistical and IT knowledge, at least to the extent necessary to adequately outsource and oversee the development services.[107]

____________________________

* This article presents the personal opinions of its authors and does not reflect the official position of any institution. We are thankful to Associate Professor of Machine Learning Meelis Kull (University of Tartu) and start-up entrepreneur Jaak Sarv (Geneto OÜ) for their consultations, without thereby passing on to them any responsibility for the content of the article.

** Translator’s note: A kratt is a mythological, Estonian creature that comes to life to do its master’s bidding when the devil is given three drops of blood. Today, it is also used as a metaphor for AI and its complexities. See also https://en.wikipedia.org/wiki/Kratt.

[1] Ministry of Economic Affairs and Communications, Eesti riiklik tehisintellekti alane tegevuskava 2019‒2021. (Estonian National Action Plan on Artificial Intelligence 2019-2021.) ‒ https://www.mkm.ee/sites/default/files/eesti_kratikava_juuli2019.pdf; State Chancellery/Ministry of Economic Affairs and Communications. Eesti tehisintellekti kasutuselevõtu ekspertrühma aruanne (Report of the Expert Group on the Implementation of Artificial Intelligence in Estonia), 2019, p. 36 ‒ https://www.riigikantselei.ee/sites/default/files/riigikantselei/strateegiaburoo/eesti_tehisintellekti_kasutuselevotu_eksperdiruhma_aruanne.pdf; Kratt Project homepage. ‒ https://www.kratid.ee/.

[2] K. Lember. Tehisintellekti kasutamine haldusakti andmisel. (The Use of Artificial Intelligence in Administrative Acts.) – Juridica 2019/10, p. 749; K. Lember. Tehisintellekti kasutamine haldusakti andmisel. (The Use of Artificial Intelligence in Administrative Acts.) Master’s thesis, Tartu 2019.

[3] Taxation Act § 462; Environmental Charges Act § 336; Unemployment Insurance Act § 23 (4). See also the Minister of Finance’s Regulation No. 15 of 14.03.2019 and the Minister of the Environment’s Regulation No. 34 of 20.06.2011.

[4] Eesti riiklik tehisintellekti alane tegevuskava (Estonia’s National Strategy for Artificial Intelligence) 2019‒2021, p. 9 ‒ https://www.mkm.ee/sites/default/files/eesti_kratikava_juuli2019.pdf.

[5] M. Mets. „Töötukassas hakkab sulle robot hüvitist määrama, kui sa töötuks jääd“. (A Robot Will Determine Your Unemployment Benefits If You Lose Your Job.) – Geenius 7.02.2019; Homme makstakse 79 370 inimesele välja üksi elava pensionäri toetus. (Tomorrow 79,370 Single Pensioner Benefits Will Be Paid.) ‒ Maaleht, 4.10.2018.

[6] K. Leetaru. A Reminder That Machine Learning Is About Correlations Not Causation. – Forbes 15.01.2019. See also Critical Approaches to Risk Assessment in Early Releases, Circuit Court Decision 1-09-14104.

[7] See in particular: the General Data Protection Regulation (GDPR; Regulation (EU) 2016/679 of the European Parliament and of the Council) Art. 22 (1) and (2)(b).

[8] For example, C. Coglianese, D. Lehr. Regulating by Robot: Administrative Decision Making in the Machine-Learning Era. ‒ Geo. L. J. 105 (2017), pp. 1147, 1167 ff.

[9] C. Coglianese. Optimizing Regulation for an Optimizing Economy. – University of Pennsylvania Journal of Law and Public Affairs 4 (2018), p. 1.

[10] Chatbots have been tested around the world, for example, for taking testimony from border crossers and asylum seekers, J. Stoklas. Bessere Grenzkontrollen durch Künstliche Intelligenz. ‒ Zeitschrift für Datenschutz (ZD-Aktuell) 2018, 06363; https://www.iborderctrl.eu/The-project; Harvard Ash Center for Democratic Governance and Innovation. Artificial Intelligence for Citizen Services and Government (2017), p. 7; A. Androutsopoulou et al. Transforming the communication between citizens and government through AI-guided chatbots. ‒ Government Information Quarterly 36 (2019), p. 358 ff. ‒ https://doi.org/10.1016/j.giq.2018.10.001. On the Statistics Estonia chatbot, cf. https://www.kratid.ee/statistikaameti-kasutuslugu.

[11] In Estonia, for example, the National Heritage Board plans to use kratts for museum inventory, https://www.muinsuskaitseamet.ee/et/uudised/kratt-salli-muudab-muuseumiinventuurid-kiiremaks-ja-mugavamaks. And elsewhere there has been hope expressed that artificial intelligence will begin to pre-sort and distribute applications and requests received by authorities, L. Guggenberger. Einsatz künstlicher Intelligenz in der Verwaltung. ‒ NVwZ 2019, p. 844, 849.

[12] https://www.kratid.ee/kasutuslood; Estonian Unemployment Insurance Fund. Õlitatud masinavärk: Kuidas tehisintellekt kogu Töötukassa tegevust juhib? (Estonian Unemployment Insurance Fund. A Well-Oiled Machine: How Artificial Intelligence Runs All the Activities of the Unemployment Insurance Fund.) – Geenius 08.01.2020; Siseturvalisuse programmi 2020–2023 kavand kooskõlastamiseks (Internal Security Program 2020-2023 Draft for Approval) (14.11.2019). ‒ http://eelnoud.valitsus.ee/main/mount/docList/184b2e57-2c40-4e41-b5c2-e2e24ab71c30#lb1Z2BiP.

[13] S. Valentine. Impoverished Algorithms: Misguided Governments, Flawed Technologies, and Social Control. ‒ Fordham Urb. L. J. 46 (2019), pp. 364, 367.

[14] E. Berman. A Government of Law and Not of Machines. – Boston Univ. L. Rev. 98 (2018), pp. 1290, 1320; C. Coglianese, D. Lehr (cit. 8), p. 1161; C. Coglianese, D. Lehr. Transparency and Algorithmic Governance. – Adm. L. Rev. 71 (2019), pp. 1, 6 ff.

[15] T. Rademacher. Predictive Policing im deutschen Polizeirecht. – AöR 142 (2017), p. 366; L. Guggenberger (cit. 11), pp. 848–849; K. Lember. – Juridica 2019 (cit. 2), pp. 750–751; Conseil d’État 427916: Parcoursup.

[16] Estonian data science community ‒ http://datasci.ee/post/2017/05/25/neli-sonakolksu-masinope-tehisintellekt-suurandmed-andmeteadus/.

[17] M. Herberger. „Künstliche Intelligenz“ und Recht. ‒ NJW 2018, p. 2826; H. Surden. Machine Learning and Law. – Wash. L. Rev. 89 (2014), pp. 87, 89.

[18] For example, the finding, in the context of national monitoring, that two-thirds of the owners of Land Cruisers less than five years old earn less than 1,200 euros per month. U. Lõhmus, Õigusriik ja inimese õigused. (The Rule of Law and Human Rights.) – Tartu, 2018, p. 121. Cf. D. Lehr, P. Ohm. Playing with the Data: What Legal Scholars Should Learn About Machine Learning. – U. C. Davis L. Rev. 51 (2017), pp. 653, 672; L. Guggenberger (cit. 11), p. 848. Data mining allows, among other things, for effective profile analysis (GDPR Art. 4 (4)), but data mining is not necessarily limited to the analysis of personal data.

[19] E. Berman (cit. 14), pp. 1277, 1279–1280, 1284, 1286; W. Hoffmann-Riem. Verhaltenssteuerung durch Algorithmen – Eine Herausforderung für das Recht. – AöR 142 (2017), pp. 1, 7‒8; Gesellschaft für Informatik. Technische und rechtliche Betrachtungen algorithmischer Entscheidungsverfahren. Studien und Gutachten im Auftrag des Sachverständigenrats für Verbraucherfragen. Berlin: Sachverständigenrat für Verbraucherfragen 2018, p. 30 – http://www.svr-verbraucherfragen.de/wp-content/uploads/GI_Studie_Algorithmenregulierung.pdf.

[20] Among many others, M. A. Lemley, B. Casey. Remedies for Robots. – Univ. of Chicago L. Rev. 86 (2019), pp. 1311, 1312; M. Finck, Automated Decision-Making and Administrative Law, found in: P. Cane et al. (ed.), Oxford Handbook of Comparative Administrative Law, Oxford: Oxford University Press (to be published) ‒ https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3433684 (2019), p. 2.

[21] Cf. M. Koit, T. Roosmaa. Tehisintellekt, (Artificial Intelligence.) Tartu: Tartu Ülikool 2011, p. 194; Estonian Data Science Community. ‒ http://datasci.ee/post/2017/05/25/neli-sonakolksu-masinope-tehisintellekt-suurandmed-andmeteadus/; E. Berman (cit. 14), pp. 1279, 1284–1285; D. Lehr, P. Ohm (cit. 18), p. 671; Gesellschaft für Informatik (cit. 19), p. 30.

[22] Instead of a man-made program, the “decision rule” of an intelligent system is thus a mathematical probability mass function, T. Wischmeyer. Regulierung intelligenter Systeme. – AöR 143 (2018), pp. 1, 47. A probability mass function gives the probability that a discrete random variable takes exactly a certain value, such as getting a six when you roll a die, https://en.wikipedia.org/wiki/Probability_mass_function. The general formula for the simplest self-learning model – a linear regression – looks like this:

$$y = w_1 x_1 + w_2 x_2 + \dots + w_n x_n$$

Here, y is the desired output variable, which is calculated from the inputs x1, …, xn, taking into account the weight parameters w1, …, wn that are recalibrated during learning. A linear regression can, for example, predict the value of real estate from its floor area in square metres, its number of rooms and its distance from downtown. The actual mathematics of self-learning algorithms is more complex: it is based on multidimensional vectors and complex models that combine a large number of regression equations. In artificial neural networks, for example, the structure of the equation mimics the neural connections in a human brain, Gesellschaft für Informatik (cit. 19), pp. 31, 34.
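The real-estate example can be reproduced with a minimal sketch. It assumes hypothetical data generated from the rule value = 2000 × square metres + 5000 × rooms − 3000 × km from downtown and, to match the formula above, fits the weights w1, …, wn without an intercept by ordinary least squares (solving the normal equations). Iterative learning methods such as gradient descent recalibrate the weights step by step but would converge to the same weights on this data.

```python
def fit_linear_regression(X: list[list[float]], y: list[float]) -> list[float]:
    """Ordinary least squares without an intercept: solve (X^T X) w = X^T y."""
    n = len(X[0])
    # Normal equations A w = b, with A = X^T X and b = X^T y.
    A = [[sum(row[i] * row[j] for row in X) for j in range(n)] for i in range(n)]
    b = [sum(row[i] * target for row, target in zip(X, y)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            factor = A[r][col] / A[col][col]
            for c in range(col, n):
                A[r][c] -= factor * A[col][c]
            b[r] -= factor * b[col]
    # Back substitution yields the weights w1, ..., wn.
    w = [0.0] * n
    for i in reversed(range(n)):
        w[i] = (b[i] - sum(A[i][j] * w[j] for j in range(i + 1, n))) / A[i][i]
    return w


def predict(w: list[float], x: list[float]) -> float:
    """y = w1*x1 + ... + wn*xn"""
    return sum(wi * xi for wi, xi in zip(w, x))


# Hypothetical flats: [square metres, rooms, km from downtown] and their values in euros,
# generated from value = 2000*m2 + 5000*rooms - 3000*km.
X = [[50, 2, 5], [70, 3, 2], [40, 1, 8], [90, 4, 10], [60, 2, 1]]
y = [95_000, 149_000, 61_000, 170_000, 127_000]

w = fit_linear_regression(X, y)
print([round(v) for v in w])          # [2000, 5000, -3000]
print(round(predict(w, [80, 3, 4])))  # 163000
```

Because the hypothetical data follows the rule exactly, the fitted weights recover it; real data is noisy, and practical models add an intercept and far more observations.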

[23] Gesellschaft für Informatik (cit. 19), p. 31; M. A. Lemley, B. Casey (cit. 20), pp. 1324–1325; C. Coglianese, D. Lehr (cit. 14), p. 15; T. Wischmeyer (cit. 22), p. 12; D. Lehr, P. Ohm (cit. 18), p. 671; J. Cobbe. Administrative Law and the Machines of Government: Judicial Review of Automated Public-Sector Decision-Making. – Legal Studies 39 (2019), p. 636. In the case of unsupervised machine learning, no output data is used and the algorithm has to find the correlations in the data itself, E. Berman (cit. 14), p. 1287.

[24] Compare to: K. Lember. Master’s thesis (cit. 2), pp. 13–14.

[25] See also M. Mets (cit. 5) on the Estonian Unemployment Insurance Fund decision.

[26] A. Guckelberger. Öffentliche Verwaltung im Zeitalter der Digitalisierung, Baden-Baden: Nomos 2019, p. 484 ff.

[27] The data warehouse of the Police and Border Guard Board has been deemed a world-class system for analysis, https://issuu.com/ajakiri_radar/docs/radar_19/14. See more on the Unemployment Insurance Fund warehouse: „Maksuamet ühise IT-süsteemiga rahul“. (Tax Board Satisfied with Joint IT System.) ‒ Äripäev, 9.05.2003.

[28] W. Hoffmann-Riem. Rechtliche Rahmenbedingungen für und regulative Herausforderungen durch Big Data, in: W. Hoffmann-Riem (ed.), Big Data – Regulative Herausforderungen, Baden-Baden: Nomos 2018, p. 20; W. Hoffmann-Riem (cit. 19), p. 13; M. Finck (cit. 20), pp. 2, 11; T. Wischmeyer (cit. 22), pp. 10, 13–14, incl. fn. 48, pp. 17–18, 24; C. Coglianese, D. Lehr (cit. 8), pp. 1156–1159.

[29] A. Deeks. The Judicial Demand for Explainable Artificial Intelligence. – Columbia L. Rev. 119 (2019), pp. 1829, 1834; M. Finck (cit. 20), p. 9; C. Coglianese, D. Lehr (cit. 14), p. 17; J. Burrell. How the machine „thinks“: Understanding opacity in machine learning algorithms. – Big Data & Society 3 (2016) 1, p. 3; J. Tomlinson, K. Sheridan, A. Harkens. Proving Public Law Error in Automated Decision-Making Systems. – https://ssrn.com/abstract=3476657 (2019), p. 10. For example, the Sharemind technology was developed in Estonia for the secure analysis of personal data, i.e. to enhance the black-box effect, D. Bogdanov. Sharemind: programmable secure computations with practical applications. Tartu Ülikooli Kirjastus 2013.

[30] D. Lehr, P. Ohm (cit. 18), p. 678; T. Wischmeyer (cit. 22), pp. 16, 33.

[31] Tehisintellekti ekspertrühma aruanne (Report of the Artificial Intelligence Expert Group) (cit. 1), p. 19; C. Coglianese, D. Lehr (cit. 8), p. 1157; D. Lehr, P. Ohm (cit. 18), p. 681; E. Berman (cit. 14), p. 1302; C. Weyerer, P. F. Langer. Garbage In, Garbage Out: The Vicious Cycle of AI-Based Discrimination in the Public Sector. – Proceedings of the 20th Annual International Conference on Digital Government Research (2019), p. 509 ff.

[32] C. Coglianese, D. Lehr (cit. 8), p. 1167.

[33] For example, in predicting recidivism, the question is whether we are interested in the likelihood of a new crime within three, five or ten years; in a public competition, the question is which variable should be used to measure the best candidate for office.

[34] E. Berman (cit. 14), pp. 1305 ff.; 1325 ff.; D. Lehr, P. Ohm (cit. 18), p. 669 ff.; J. Burrell (cit. 29), p. 7.

[35] C. Coglianese, D. Lehr (cit. 14), p. 32 ff.

[36] See 3.5 below for more on these risks, cf. M. Schröder. Rahmenbedingungen der Digitalisierung der Verwaltung. – Verwaltungsarchiv 110 (2019), pp. 328, 347.

[37] W. Hoffmann-Riem (cit. 19), pp. 24–25; A. Guckelberger (cit. 26), p. 410; C. Krönke, Vergaberecht als Digitalisierungsfolgenrecht. Zugleich ein Beitrag zur Theorie des Vergaberechts. – Die Verwaltung 52 (2019), p. 65; M. Finck (cit. 20), p. 10.

[38] E. Berman (cit. 14), p. 1326; T. Wischmeyer (cit. 22), p. 26 ff.; M. Finck (cit. 20), p. 11; L. Guggenberger (cit. 11), p. 847; H. Steege. Algorithmenbasierte Diskriminierung durch Einsatz von Künstlicher Intelligenz. – MultiMedia und Recht 2019, p. 716.

[39] Cf. M. Schröder (cit. 36), p. 343. The same goes for Australia, M. Finck (cit. 20), p. 18.

[40] T. Wischmeyer (cit. 22), pp. 7–8, 41. Article 22 of the GDPR also allows fully automated decisions in processing personal data only as an exception – for the purposes of fulfilling a contract, in cases stipulated by the law or with the person’s consent.

[41] An example is the SKAIS2 information system saga of the Social Insurance Board: https://www.err.ee/613092/skais2-projekti-labikukkumise-kronoloogia.

[42] Legislation with a much narrower scope includes: Environmental Charges Act § 336 (1, 3), Unemployment Insurance Act § 23 (4). See also cit. 63 below regarding discretionary authority.

[43] Compare to the proposed supplement to the Administrative Procedure Act § 51 (1): “An administrative institution may issue an administrative act or document automatically, without any direct intervention by a person acting on behalf of the administrative institution (henceforth: automated administrative act and document)”, T. Kerikmäe et al., Identifying and Proposing Solutions to the Regulatory Issues Needed to Address the Use of Autonomous Intelligent Technologies, Phase III Report (2019), pp. 6–7.

[44] Bundesministerium des Inneren. Automatisiert erlassene Verwaltungsakte und Bekanntgabe über Internetplattformen – Fortentwicklung des Verfahrensrechts im Zeichen der Digitalisierung: Wie können rechtsstaatliche Standards gewahrt werden? – NVwZ 2015, pp. 1114, 1116–1117.

[45] Because of their precision, most traffic rules can probably be taught to self-driving cars, but traffic also presents dilemmas that have no determinate answer; see also M. A. Lemley, B. Casey (cit. 20), pp. 1311, 1329 ff. It is also difficult to teach a machine to make exceptions to rules, such as driving through a red light, ibid., p. 1349.

[46] E. Berman (cit. 14), p. 1329; A. Guckelberger (cit. 26), pp. 367–370. Compare to: M. Maksing. Kohtupraktika ühtlustamise võimalustest infotehnoloogiliste lahenduste abil. (The Possibilities for Harmonizing Case Law Using IT Solutions). Master’s thesis, Tallinn: Tartu Ülikool 2017, p. 25.

[47] L. Reisberg. Semiotic model for the interpretation of undefined legal concepts and filling legal gaps, Tartu: Tartu Ülikooli Kirjastus 2019, pp. 170–173; K. Larenz, C.-W. Canaris. Methodenlehre der Rechtswissenschaft, Berlin: Springer 1995, p. 26; K. N. Kotsoglou. Subsumtionsautomat 2.0: Über die (Un-)Möglichkeit einer Algorithmisierung der Rechtserzeugung. – JZ 2014, pp. 451, 452–454; R. Narits. Õiguse entsüklopeedia, Tallinn: Tartu Ülikool 1995, p. 68 ff.; R. Narits. Õigusteaduse metodoloogia I, Tallinn, 1997, p. 84 ff.

[48] See more on this distinction: N. Luhmann. Das Recht der Gesellschaft, Frankfurt am Main: Suhrkamp 2018, p. 195 ff.

[49] A decision whether the vital aspects of a case meet the presumptive preconditions for application stipulated by a norm, R. Narits. Õiguse entsüklopeedia, Tallinn: Tartu Ülikool 1995, p. 73.

[50] For example, the general requirements for agricultural animals; see Decision of the Administrative Law Chamber of the Supreme Court 3-15-443/54, para. 12.

[51] Cf. C. Coglianese, D. Lehr (cit. 14), p. 14; E. Berman (cit. 14), p. 1351; K. N. Kotsoglou (cit. 47), p. 453; M. Herberger (cit. 17), p. 2829.

[52] Moreover, it must be noted that even if someone were able to convert all case law into algorithmic training data, the case law would first have to be interpreted, because judgments are written in natural language.

[53] This would be acceptable from the standpoint of legal certainty but unacceptable from a fairness standpoint, compare to: A. Kaufmann. Rechtsphilosophie, München: Beck 1997, p. 122.

[54] E. Berman (cit. 14), p. 1315; cf. F. Pasquale. A Rule of Persons, Not Machines: The Limits of Legal Automation. – Geo. Wash. L. Rev. 87 (2019), pp. 1, 48; cf. A. Adrian. Der Richterautomat ist möglich – Semantik ist nur eine Illusion. – Rechtstheorie 48 (2017), pp. 77, 87; P. Enders. Einsatz künstlicher Intelligenz bei juristischer Entscheidungsfindung. – JA 2018, pp. 721, 726.

[55] E. Berman (cit. 14), p. 1331. Cf. the natural language processing systems already being tested in A. Adrian’s lab, which are capable of finding statistical correlations between court judgments and scientific articles or other legal “language equivalent” texts, A. Adrian (cit. 54), p. 188 ff. In our view, this is not enough for a rational application of the law.

[56] T. Wischmeyer (cit. 22), p. 334.

[57] E. Berman (cit. 14), p. 1338.

[58] In the context of discretion: K. Lember. Master’s thesis (cit. 2), p. 53.

[59] A computer processes legal texts as data, not as information: K. N. Kotsoglou (cit. 47). A. Adrian argues that humans, too, merely pretend to understand the meaning of natural language and that semantics is only an illusion, so that replacing the human with another computer poses no fundamental problem: A. Adrian (cit. 54), p. 91. The scope of this article does not allow us to analyze these philosophical claims. We assume that humans are conscious and capable of understanding sentences in natural language, including legal provisions, and of relating them to their own consciousness.

[60] See also K. Merusk, I. Pilving. Halduskohtumenetluse seadustik. Kommenteeritud väljaanne, (Code of Administrative Court Procedure. Annotated Edition) Tallinn: Juura 2013, § 158 com. F.

[61] Decision of the Administrative Law Chamber of the Supreme Court 3-3-1-72-13, par. 21; and 3-3-1-81-07, par. 13-14. Cf. M. Schröder (cit. 36), p. 333; A. Guckelberger (cit. 26), p. 373.

[62] See also the sources cited by L. Guggenberger (cit. 11), p. 848.

[63] The drafters of § 46² of the Taxation Act did not consider it possible to use automated administrative acts where discretion is involved: Explanatory Report to the Draft Act amending the Taxation Act and other Acts (675 SE), p. 36. The text of the Act itself, however, does not contain this restriction.

[64] See: R. Alexy. Constitutional Rights, Proportionality, and Argumentation. Public lecture at the University of Tartu, December 10, 2019. ‒ https://www.uttv.ee/naita?id=29148; T. Mori. Wirkt in der Abwägung wirklich das formelle Prinzip? Eine Kritik an der Deutung verfassungsgerichtlicher Entscheidungen durch Robert Alexy. – Der Staat 58 (2019), pp. 555, 561.

[65] T. Mori (cit. 64), p. 562, for example, cites case law in which this formula does not apply.

[66] Cf. C. Coglianese, D. Lehr (cit. 8), p. 1218; J. Cobbe. Administrative Law and the Machines of Government: Judicial Review of Automated Public-Sector Decision-Making (2018). ‒ https://ssrn.com/abstract=3226913, p. 20; A. von Graevenitz. „Zwei mal Zwei ist Grün“ – Mensch und KI im Vergleich. – ZRP 2018, pp. 238, 240. See also Ü. Madise. Põhiseaduse vaimust ja võimust muutuvas ühiskonnas (The Spirit and Power of the Constitution in a Changing Society), in: T. Soomre (ed.). Teadusmõte Eestis IX. Teadus ja ühiskond, 2018, p. 138. On value judgements: R. Narits. Eesti õiguskord ja väärtusjurisprudents (Estonian Legal Order and Value Jurisprudence). – Juridica 1998/1, p. 2.

[67] Likewise A. Guckelberger (cit. 26), p. 389 ff.; T. Wischmeyer (cit. 22), pp. 17‒18. Cf. A. Berger. Der automatisierte Verwaltungsakt. ‒ NVwZ 2018, pp. 1260, 1263.

[68] For examples, see E. Andresen, V. Olle, in: J. Sootak (ed.). Õigus igaühele. Tallinn: Juura 2014, pp. 149–150; I. Pilving, in: A. Aedmaa et al. Haldusmenetluse käsiraamat. Tartu: Tartu Ülikooli Kirjastus 2004, p. 215 ff. On automated processing of personal data: GDPR recital 71, first paragraph.

[69] E. Berman (cit. 14), p. 1323; A. Guckelberger (cit. 26), p. 396.

[70] Cit. 8, p. 1186.

[71] The same in the 675 SE Explanatory Report (cit. 63), p. 36. See also Decision of the Administrative Law Chamber of the Supreme Court 3-3-1-76-14, par. 14, on disciplinary measures.

[72] T. Rademacher (cit. 15), p. 383; A. Adrian (cit. 54), pp. 77, 86.

[73] Concerning prohibitions on procurement agreements, Decision of the Administrative Law Chamber of the Supreme Court 3-3-1-7-17, par. 11. In the case of discretion, ignorance can be an excuse if the person had the opportunity to inform the authority of it; concerning deportation, Decision of the Administrative Law Chamber of the Supreme Court 3-18-1891/46, par. 19.

[74] § 24 (1), sentence 3 of the Verwaltungsverfahrensgesetz (VwVfG). On this, F. Kopp, U. Ramsauer. Verwaltungsverfahrensgesetz. Kommentar, München: Beck 2019, § 24 marginal no. 8; L. Guggenberger (cit. 11), p. 847.

[75] M. Belkin. Maksuamet hakkab tehisintellekti abiga ümbrikupalga maksjaid püüdma. (The Taxation Board Will Use Artificial Intelligence to Find Envelope Wages) – Geenius 06.01.2020.

[76] The obligation to state reasons is found in § 13 (2 ff.) and § 15 (1) 1 ff. of the Constitution, as well as in CFR art. 41 par. 2 ff. See more: N. Parrest, in: A. Aedmaa et al. (cit. 68), p. 299 ff. Moreover, where decisions are entirely opaque, broad public support for machine decision-making is unlikely, since opacity tends to arouse suspicions of manipulation or even of a “deep state”; see M. Herberger (cit. 17), p. 2828.

[77] Germany: § 39 VwVfG; France: art. L211-5, Code des relations entre le public et l’administration; United Kingdom: House of Lords, R v. Secretary of State for the Home Department, ex parte Doody (1993) 3 All E.R. 92; European Union: TFEU art. 296 par. 2; on ECHR art. 6: ECtHR 13616/88, Hentrich v. France (1994), par. 56; H. Palmer Olsen et al. What’s in the Box? The Legal Requirement of Explainability in Computationally Aided Decision-Making in Public Administration (2019). ‒ https://ssrn.com/abstract=3402974, p. 22.

[78] United States District Court, S.D. Texas, Houston Division: Hous. Fed’n of Teachers. – 251 F. Supp. 3d (2017), p. 1168. Nor has it been possible to explain algorithms that suggest which persons police patrols should stop and check. For examples, see S. Valentine (cit. 13), pp. 372–373, 367.

[79] C. Cath et al. Artificial Intelligence and the ‘Good Society’: the US, EU, and UK approach. ‒ Science and Engineering Ethics 24 (2017), p. 9.

[80] H. Palmer Olsen et al. (cit. 77), pp. 23–24. In Japan, for example, artificial intelligence helps Members of Parliament prepare responses to citizens’ memoranda: Harvard Ash Center (cit. 10), p. 8. On IBM’s debating robot, see K. Lember. Master’s thesis (cit. 2), p. 38. See more about this method: F. Pasquale (cit. 54), p. 49 ff.

[81] Decision of the Administrative Law Chamber of the Supreme Court 3-3-1-29-12, par. 20. There is no reason to exclude the use of texts drafted with such a method if an official checks the draft substantively and carefully. See also Decision 3-17-1110/84 of the same Chamber, par. 18, where the Chamber explains that the reasoning for an administrative decision must not be limited to a mechanical copy of the norms.

[82] Additional citations: M. Herberger (cit. 17), pp. 2827–2828; K. Lember. Master’s thesis (cit. 2), p. 36; also GDPR recital 71.

[83] L. Edwards, M. Veale. Slave to the Algorithm: Why a Right to an Explanation Is Probably Not the Remedy You Are Looking for. ‒ Duke L. & Tech. Rev. 16 (2017–2018), pp. 18, 43, 56, 67; E. Berman (cit. 14), pp. 1316–1317; W. Hoffmann-Riem (cit. 28), p. 60. On restrictions on prison working methods: Tartu Circuit Court 3-16-418, par. 12.

[84] A. Deeks (cit. 29), p. 1834; E. Berman (cit. 14), p. 1317; L. Edwards, M. Veale (cit. 83), p. 61 ff.; M. Finck (cit. 20), p. 15. Among others see: A. Adadi, M. Berrada. Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI). – IEEE Access 2018/6, p. 52138 ff. ‒ https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=8466590.

[85] C. Coglianese, D. Lehr (cit. 14), p. 52; A. Deeks (cit. 29), p. 1836; T. Wischmeyer (cit. 22), pp. 61–62.

[86] L. Guggenberger (cit. 11), p. 849; K. D. Ashley. Artificial Intelligence and Legal Analytics: New Tools for Law Practice in the Digital Age. Cambridge University Press 2017, p. 3.

[87] This may be important in determining bias, but bias is far from the only aspect to consider when reviewing an administrative decision.

[88] On the logical link between so-called factual and legal reasoning, using the example of restrictions on market trading: Decision of the Administrative Law Chamber of the Supreme Court 3-3-1-66-03, par. 19.

[89] K. Lember. Master’s thesis (cit. 2), p. 53.

[90] For possible models, see section 4 below.

[91] W. Hoffmann-Riem (cit. 28), p. 60 ff.

[91a] The courts have condemned unreasoned, semi-automatic risk assessments used in parole decisions, stating that they “mean nothing in the eyes of the court”: Tartu Circuit Court 1-13-8065, par. 26; Tartu County Court 1-13-7295, par. 14.

[92] C. Coglianese, D. Lehr (cit. 14), p. 44.

[93] For example, when assessing the danger posed by a foreign repeat offender, the police do not have any room for uncontrolled evaluation if they plan to issue that person an expulsion order. Danger assessment is not only a statistical prognosis of a new offense but also a legal evaluation based on that prognosis: whether it reaches the threshold envisaged by the legislator, which cannot be defined in quantitative terms. However, judicial review is limited as regards the consequences (whether to impose an entry ban and for how long): Decision of the Administrative Law Chamber of the Supreme Court 3-17-1545/18, par. 28. See also I. Pilving. Kui range peab olema halduskohus? (How Strict Must an Administrative Court Be?) – RiTo 2019/39, pp. 61, 64 ff.

[94] Cit. 14, pp. 1277, 1283.

[95] M. Schröder (cit. 36), p. 342. Where necessary, the court must have access to this information, irrespective of the interest in protecting business secrets; the court may, however, restrict the other parties’ access to information containing business secrets (Code of Administrative Court Procedure § 88 (2)).

[96] Cf. however J. Cobbe (cit. 66), p. 8.

[97] H. Palmer Olsen et al. (cit. 77), p. 23.

[98] T. Wischmeyer (cit. 22), p. 34.

[99] A. Guckelberger (cit. 26), pp. 386–387.

[100] E. Berman (cit. 14).

[101] C. Coglianese, D. Lehr (cit. 14), p. 30; C. Coglianese, D. Lehr (cit. 8), p. 1214.

[102] Also: A. Guckelberger (cit. 26), p. 386.

[103] Art. L311-3-1 par. 2, Code des relations entre le public et l’administration. K. Lember also sees this as a possible solution: Master’s thesis (cit. 2), p. 40.

[104] T. Wischmeyer (cit. 22), p. 41.

[105] Practical experience so far shows that officials play a decisive role in predictive decisions, T. Rademacher (cit. 15), pp. 378, 384.

[106] Cf. A. Vermeule. Rationally Arbitrary Decisions in Administrative Law. – The Journal of Legal Studies 44 (2015), p. 475.

[107] C. Coglianese, D. Lehr (cit. 14), p. 30.